
As new versions of VoltDB become available, you will want to upgrade the VoltDB software on your database cluster. The simplest approach for upgrading recent versions of VoltDB (V6.8 or later) is to perform an orderly shutdown saving a final snapshot, upgrade the software on all servers, then restart the database. (If you are upgrading from earlier versions of the software, you can still upgrade using a snapshot, but you will need to perform the save and restore operations manually.)

However, upgrading using snapshots involves downtime while the software is being updated. An alternative is to use database replication (DR) — either passive DR or cross data center replication (XDCR) — to upgrade with minimal or no downtime.

Using passive DR you can copy the active database contents to a new cluster, then switch the application clients to point to the new server. The advantage of this process is that the only downtime the business application sees is the time needed to promote the new cluster and redirect the clients.

Using cross data center replication (XDCR), it is possible to perform an online upgrade, where there is no downtime and the database is accessible throughout the upgrade operation. If two or more clusters are already active participants in an XDCR environment, you can shut down and upgrade the clusters, one at a time, leaving at least one cluster available at all times.

You can also use XDCR to upgrade a cluster, with limited extra hardware, by operationally splitting the cluster into two. Although this approach does not require downtime, it does reduce the K-safety for the duration of the upgrade.

The following sections describe the five approaches to upgrading VoltDB software:

Upgrading the VoltDB software on a single database cluster is easy. All you need to do is perform an orderly shutdown saving a final snapshot, upgrade the VoltDB software on all servers in the cluster, then restart the database. The steps to perform this procedure are:

Shut down the database and save a final snapshot (voltadmin shutdown --save).

Upgrade VoltDB on all cluster nodes.

Restart the database (voltdb start).

This process works for any recent (V6.8 or later) release of VoltDB.
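The three steps above can be sketched as a small shell script. This is only an illustration under stated assumptions: the server name svr1 is hypothetical, the software installation itself is site-specific and shown as a reminder, and the script prints the commands (dry run) unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
# Set DRY_RUN=0 in the environment to actually run the commands.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# upgrade_in_place HOST
# HOST is a hypothetical server name used for illustration.
upgrade_in_place() {
    host="$1"
    # Step 1: orderly shutdown, saving a final snapshot.
    run voltadmin shutdown --save --host="$host"
    # Step 2: site-specific, so only a reminder is printed here.
    echo "# install the new VoltDB release on all cluster nodes now"
    # Step 3: restart the database (the start command runs on each node).
    run voltdb start --host="$host"
}

upgrade_in_place svr1
```

Keeping the dry run as the default makes it possible to review the exact command sequence before touching a production cluster.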

To upgrade older versions of VoltDB software (prior to V6.8), you must perform the save and restore operations manually. The steps when upgrading from older versions of VoltDB are:

Place the database in admin mode (voltadmin pause).

Perform a manual snapshot of the database (voltadmin save --blocking).

Shut down the database (voltadmin shutdown).

Upgrade VoltDB on all cluster nodes.

Re-initialize the root directory on all nodes (voltdb init --force).

Start a new database in admin mode (voltdb start --pause).

Restore the snapshot created in Step #2 (voltadmin restore).

Return the database to normal operations (voltadmin resume).
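The manual procedure above might be scripted along the following lines. This is a sketch, not a tested runbook: the server name, snapshot directory, and snapshot ID are hypothetical, and the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# manual_upgrade HOST SNAPSHOT_DIR SNAPSHOT_ID
# All three arguments are hypothetical values chosen by the operator.
manual_upgrade() {
    host="$1"
    dir="$2"
    id="$3"
    run voltadmin pause --host="$host"                     # 1. enter admin mode
    run voltadmin save --blocking --host="$host" "$dir" "$id"   # 2. manual snapshot
    run voltadmin shutdown --host="$host"                  # 3. stop the database
    echo "# install the new VoltDB release on all cluster nodes now"  # 4.
    run voltdb init --force                                # 5. re-initialize the root (each node)
    run voltdb start --pause --host="$host"                # 6. start in admin mode
    run voltadmin restore --host="$host" "$dir" "$id"      # 7. restore the snapshot from step 2
    run voltadmin resume --host="$host"                    # 8. resume normal operations
}

manual_upgrade svr1 /var/backups/voltdb pre_upgrade
```

The same directory and ID are passed to both save and restore so that step 7 picks up exactly the snapshot written in step 2.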

When upgrading the VoltDB software in a production environment, it is possible to minimize the disruption to client applications by upgrading across two clusters using passive database replication (DR). To use this process you need a second database cluster to act as the DR replica and you must have a unique cluster ID assigned to the current database.

The basic process for upgrading the VoltDB software using DR is to:

Install the new VoltDB software on the secondary cluster

Use passive DR to synchronize the database contents from the current cluster to the new cluster

Pause the current database and promote the new cluster, switching the application clients to the new upgraded database

The following sections describe in detail the prerequisites for using this process, the steps to follow, and — in case there are any issues with the updated database — the process for falling back to the previous software version.

The prerequisites for using DR to upgrade VoltDB are:

A second cluster with the same configuration (that is, the same number of servers and sites per host) as the current database cluster.

The current database cluster must have a unique cluster ID assigned in its deployment file.

The cluster ID is assigned in the <dr> section of the deployment file and must be set when the cluster starts. It cannot be added or altered while the database is running. So if you are considering using this process for upgrading your production systems, be sure to add a <dr> tag to the deployment and assign a unique cluster ID when starting the database, even if you do not plan on using DR for normal operations.

For example, you would add the following element to the deployment file when starting your primary database cluster to assign it the unique ID of 3.

<dr id="3"/>

An important constraint to be aware of when using this process is that you must not make any schema changes during the upgrade process. This includes the period after the upgrade while you verify the application's proper operation on the new software version. If any changes are made to the schema, you may not be able to readily fall back to the previous version.

The procedure for upgrading the VoltDB software on a running database using DR is the following. In the examples, we assume the existing database is running on a cluster with the nodes oldsvr1 and oldsvr2 and the new cluster includes servers newsvr1 and newsvr2. We will assign the clusters unique IDs 3 and 4, respectively.

Install the new VoltDB software on the secondary cluster.

Follow the steps in the section "Installing VoltDB" in the Using VoltDB manual to install the latest VoltDB software.

Start the second cluster as a replica of the current database cluster.

Once the new software is installed, create a new database on the secondary server using the voltdb init --force and voltdb start commands and including the necessary DR configuration to create a replica of the current database. For example, the configuration file on the new cluster might look like this:

<dr id="4" role="replica"> <connection source="oldsvr1,oldsvr2"/> </dr>

Once the second cluster starts, apply the schema from the current database to the second cluster. Once the schemas match on the two databases, replication will begin.

Wait for replication to stabilize.

During replication, the original database will send a snapshot of the current content to the new replica, then send binary logs of all subsequent transactions. You want to wait until the snapshot is finished and the ongoing DR is processing normally before proceeding.

First monitor the DR statistics on the new cluster. The DR consumer state changes to "RECEIVE" once the snapshot is complete. You can check this in the Monitor tab of the VoltDB Management Center or from the command line by using sqlcmd to call the @Statistics system procedure, like so:

$ sqlcmd --servers=newsvr1
1> exec @Statistics drconsumer 0;

Once the new cluster reports the consumer state as "RECEIVE", you can monitor the rate of replication on the existing database cluster using the DR producer statistics. Again, you can view these statistics in the Monitor tab of the VoltDB Management Center or by calling @Statistics using sqlcmd:

$ sqlcmd --servers=oldsvr1
1> exec @Statistics drproducer 0;

What you are looking for on the producer side is that the DR latency is low, ideally under a second, because the DR latency helps determine how long you will wait for the cluster to quiesce when you pause it and, subsequently, how long the client applications will be stalled waiting for the new cluster to be promoted. You determine the latency by looking at the difference between the statistics for the last queued timestamp and the last ACKed timestamp. The difference between these values gives you the latency in microseconds. When the latency reaches a stable, low value you are ready to proceed.
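The latency arithmetic described above is simple enough to sketch in shell. The example assumes you have already extracted the last queued and last ACKed timestamps as integer microsecond values; how you pull them out of the @Statistics output is not shown, and the sample numbers are made up.

```shell
#!/bin/sh
# dr_latency_us LAST_QUEUED_US LAST_ACKED_US
# Both arguments are timestamps expressed in microseconds.
dr_latency_us() {
    echo $(( $1 - $2 ))
}

# latency_ok LATENCY_US [LIMIT_US]
# Succeeds when the latency is below the limit (default: 1 second).
latency_ok() {
    [ "$1" -lt "${2:-1000000}" ]
}

# Made-up sample values: the last queued transaction is 0.3 seconds
# ahead of the last acknowledged one.
latency=$(dr_latency_us 1700000000500000 1700000000200000)
if latency_ok "$latency"; then
    echo "DR latency ${latency}us: OK to proceed"
else
    echo "DR latency ${latency}us: keep waiting"
fi
```

A single low reading is not enough on its own; the text asks for a stable value, so in practice you would sample the statistics several times before pausing the database.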

Pause the current database.

The next step is to pause the current database. You do this using the voltadmin pause --wait command:

$ voltadmin pause --host=oldsvr1 --wait

The --wait flag tells voltadmin to wait until all DR and export queues are flushed to their downstream targets before returning control to the shell prompt. This guarantees that all transactions have reached the new replica cluster.

If DR or export are blocked for any reason (such as a network outage or the target server being unavailable), the voltadmin pause --wait command will continue to wait and periodically report on what queues are still busy. If the queues do not progress, you will want to fix the underlying problem before proceeding to ensure you do not lose any data.

Promote the new database.

Once the current database is fully paused, you can promote the new database, using the voltadmin promote command:

$ voltadmin promote --host=newsvr1

At this point, your database is up and running on the new VoltDB software version.

Redirect client applications to the new database.

To restore connectivity to your client applications, redirect them from the old cluster to the new cluster by creating connections to the new cluster servers newsvr1, newsvr2, and so on.

Shut down the original cluster.

At this point you can shut down the old database cluster.

Verify proper operation of the database and client applications.

The last step is to verify that your applications are operating properly against the new VoltDB software. Use the VoltDB Management Center to monitor database transactions and performance and verify transactions are completing at the expected rate and volume.

Your upgrade is now complete. If, at any point, you decide there is an issue with your application or your database, it is possible to fall back to the previous version of VoltDB as long as you have not made any changes to the underlying database schema. The next section explains how to fall back when necessary.
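The pause, promote, and shutdown steps of the procedure above could be strung together as in the following sketch. It reuses the oldsvr1 and newsvr1 names from the examples, stops at the first failing command, and only prints the commands unless DRY_RUN=0 is set. Redirecting the clients is application-specific and appears only as a reminder.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# cutover OLD_HOST NEW_HOST
cutover() {
    old="$1"
    new="$2"
    # Pause the current database and wait for DR and export queues to drain.
    run voltadmin pause --host="$old" --wait || return 1
    # Promote the replica only after the pause has completed.
    run voltadmin promote --host="$new" || return 1
    echo "# redirect client applications to $new before stopping $old"
    # Stop the original cluster once clients have moved.
    run voltadmin shutdown --host="$old"
}

cutover oldsvr1 newsvr1
```

Returning early on a failed pause matters here: promoting before the queues are flushed is exactly the situation the --wait flag is meant to prevent.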

In extreme cases, you may find there is an issue with your application and the latest version of VoltDB. Of course, you normally would discover this during testing prior to a production upgrade. However, if that is not the case and an incompatibility or other conflict is discovered after the upgrade is completed, it is possible to fall back to a previous version of VoltDB. The basic process for falling back is the following:

If any problems arise before Step #6 (redirecting the clients) is completed, simply shut down the new replica and resume the old database using the voltadmin resume command:

$ voltadmin shutdown --host=newsvr1
$ voltadmin resume --host=oldsvr1

If issues are found after Step #6, the fall back procedure is basically to repeat the upgrade procedure described in Section 4.4.3.2, “The Passive DR Upgrade Process” except reversing the roles of the clusters and replicating the data from the new cluster to the old cluster. That is:

Update the configuration file on the new cluster to enable DR as a master, removing the <connection> element:

<dr id="4" role="master"/>

Shut down the original database and edit the configuration file to enable DR as a replica of the new cluster:

<dr id="3" role="replica"> <connection source="newsvr1,newsvr2"/> </dr>

Re-initialize and start the old cluster using the voltdb init --force and voltdb start commands.

Follow steps 3 through 8 in Section 4.4.3.2, “The Passive DR Upgrade Process” reversing the roles of the new and old clusters.

It is also possible to upgrade the VoltDB software using cross data center replication (XDCR), by simply shutting down, upgrading, and then re-initializing each cluster, one at a time. This process requires no downtime, assuming your client applications are already designed to switch between the active clusters.

Use of XDCR for upgrading the VoltDB software is easiest if you are already using XDCR because it does not require any additional hardware or reconfiguration. The following instructions assume that is the case. Of course, you could also create a new cluster and establish XDCR replication between the old and new clusters just for the purpose of upgrading VoltDB. The steps for the upgrade outlined in the following sections are the same. But first you must establish the cross data center replication between the two (or more) clusters. See the chapter on Database Replication in the Using VoltDB manual for instructions on completing this initial step.

Once you have two clusters actively replicating data with XDCR (let's call them clusters A and B), the steps for upgrading the VoltDB software on the clusters are as follows:

Pause and shut down cluster A (voltadmin pause --wait and shutdown).

Clear the DR state on cluster B (voltadmin dr reset).

Update the VoltDB software on cluster A.

Start a new database instance on A, making sure to use the old deployment file so the XDCR connections are configured properly (voltdb init --force and voltdb start).

Load the schema on Cluster A so replication starts.

Once the two clusters are synchronized, repeat steps 1 through 5 for cluster B.

Note that since you are upgrading the software, you must create a new instance after the upgrade (step #3). When upgrading the software, you cannot recover the database using just the voltdb start command; you must use voltdb init --force first to create a new instance and then reload the existing data from the running cluster B.

Also, be sure all data has been copied to the upgraded cluster A after step #5 and before proceeding to upgrade the second cluster. You can do this by checking the @Statistics system procedure selector DRCONSUMER on cluster A. Once the DRCONSUMER statistics State column changes to "RECEIVE", you know the two clusters are properly synchronized and you can proceed to step #6.
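As a rough sketch, the per-cluster part of this procedure could be wrapped in one function and called first for cluster A and later for cluster B. The host names asvr1 and bsvr1 and the file name deployment.xml are hypothetical, loading the schema is left as a reminder, and nothing is executed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# upgrade_xdcr_cluster TARGET_HOST PEER_HOST
# TARGET_HOST belongs to the cluster being upgraded, PEER_HOST to the
# cluster that stays online. Both names are hypothetical.
upgrade_xdcr_cluster() {
    target="$1"
    peer="$2"
    run voltadmin pause --wait --host="$target"
    run voltadmin shutdown --host="$target"
    # Clear the DR state on the cluster that remains running.
    run voltadmin dr reset --host="$peer"
    echo "# install the new VoltDB release on the nodes of $target now"
    # Re-create the instance with the old deployment file so the XDCR
    # connections are configured as before (run on each node).
    run voltdb init --force --config=deployment.xml
    run voltdb start --host="$target"
    echo "# load the schema on $target, then wait for State = RECEIVE"
    run sqlcmd --servers="$target" --query="exec @Statistics DRCONSUMER 0"
}

upgrade_xdcr_cluster asvr1 bsvr1
```

Once the DRCONSUMER check on the upgraded cluster reports "RECEIVE", the same function can be called again with the two arguments swapped.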

In extreme cases, you may decide after performing the upgrade that you do not want to use the latest version of VoltDB. If this happens, it is possible to fall back to the previous version of VoltDB.

To "downgrade" from a new version back to the previous version, follow the steps outlined in Section 4.4.4, “Performing an Online Upgrade Using Multiple XDCR Clusters” except rather than upgrading to the new version in Step #3, reinstall the older version of VoltDB. This process is valid as long as you have not modified the schema or deployment to use any new or changed features introduced in the new version.

It is possible to use XDCR and K-safety to perform an online upgrade, where the database remains accessible throughout the upgrade process, without requiring an additional cluster. As opposed to upgrading two separate XDCR clusters, this process enables an online upgrade with little or no extra hardware requirements.

On the other hand, this upgrade process is quite complex and during the upgrade, the K-safety of the database is reduced. In other words, this process trades off the need for extra hardware against a more complicated upgrade process and increased risk to availability if any nodes crash unexpectedly during the upgrade.

Essentially, the process for upgrading a cluster with limited additional hardware is to split the existing cluster hardware into two separate clusters, upgrading the software along the way. To make this possible, there are several prerequisites:

The cluster must be configured as an XDCR cluster.

The cluster configuration must contain a <dr> element that identifies the cluster ID and specifies the role as "xdcr". For example:

<dr id="1" role="xdcr"/>

Note that the replication itself can be disabled (that is, listen="false"), but the cluster must have the XDCR configuration in place before the online upgrade begins.

All of the tables in the database must be identified as DR tables.

This means that the schema must specify DR TABLE {table-name} for all of the tables, or else data for the non-DR tables will be lost.

K-Safety must be enabled.

The K-safety value (set using the kfactor attribute of the <cluster> element in the configuration file) must be set to one or higher.

Additionally, if the K-safety value is 1 and the cluster has an odd number of nodes, you will need one additional server to complete the upgrade. (The additional server is no longer needed after the upgrade is completed.)
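The K-safety and hardware rule above can be expressed as a small check. This sketch covers only that one prerequisite (it does not inspect the XDCR configuration or the DR tables), and the function name is made up for illustration.

```shell
#!/bin/sh
# online_upgrade_check KFACTOR NODE_COUNT
# Reports whether the K-safety prerequisite is met and whether the
# extra server described in the text is needed.
online_upgrade_check() {
    kfactor="$1"
    nodes="$2"
    if [ "$kfactor" -lt 1 ]; then
        echo "not eligible: K-safety must be 1 or higher"
        return 1
    fi
    # K=1 with an odd number of nodes needs one temporary extra server.
    if [ "$kfactor" -eq 1 ] && [ $((nodes % 2)) -eq 1 ]; then
        echo "eligible: one additional server needed"
    else
        echo "eligible: no additional server needed"
    fi
}

online_upgrade_check 1 4
online_upgrade_check 1 5
```

The voltadmin plan_upgrade command remains the authoritative source for what a specific cluster needs; a check like this is only a quick sanity test before generating the plan.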

Table 4.1, “Overview of the Online Upgrade Process” summarizes the overall process for online upgrade.

Because the process is complicated and requires shutting down and restarting database servers in a specific order, it is important to create an upgrade plan before you begin. The voltadmin plan_upgrade command generates such a plan, listing the detailed steps for each phase based on your current cluster configuration. Before you attempt the online upgrade process, use voltadmin plan_upgrade to generate the plan and thoroughly review it to make sure you understand what is required.

Table 4.1. Overview of the Online Upgrade Process

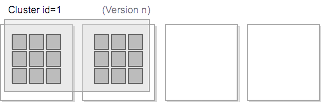

| Phase #1 | Ensure the cluster meets all the necessary prerequisites (XDCR and K-safety configured, all tables DR-enabled). Install the new VoltDB software version in the same location on all cluster nodes. If not already set, be sure to enable DR for the cluster by setting the listen attribute to true in the cluster configuration. For the purposes of demonstration, the following illustrations assume a four-node database configured with a cluster ID set to 1. |

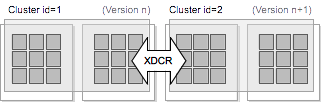

| Phase #2 | Stop half the cluster nodes. Because of K-safety, it is possible for half the servers to be removed from the cluster without stopping the database. The plan generated by voltadmin plan_upgrade will tell you exactly which nodes to stop. If the cluster has an odd number of nodes and the K-safety is set to one, you can stop the smaller "half" of the cluster and then add another server to create two equal halves.  |

| Phase #3 | Create a new cluster on the stopped nodes, using the new VoltDB software version. Be sure to configure the cluster with a new cluster ID, XDCR enabled, and listing the original cluster as the DR connection source. You can also start the new cluster using the --missing argument so that, although it starts with only half the nodes, it expects the full complement of nodes for full K-safety. In the example, the start command would specify --count=2 and --missing=2. After loading the schema and procedures from the original cluster, XDCR will synchronize the two clusters.  |

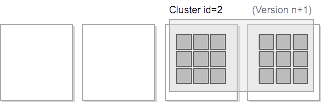

| Phase #4 | Once the clusters are synchronized, redirect all client applications to the new cluster. Stop the original cluster and reset DR on the new cluster using the voltadmin dr reset command.  |

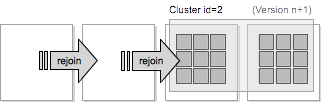

| Phase #5 | Reinitialize and rejoin the remaining nodes to the new cluster, using the new software version. Because the new cluster was started with the --missing option, the remaining nodes can join the cluster and bring it back to full K-safety. If you started with an odd number of servers and K=1 (and therefore added a server to create the second cluster) you can either rejoin all but one of the remaining nodes, or if you want to remove the specific server you added, remove it before rejoining the last node.  |

| Complete | At this point the cluster is fully upgraded.  |
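The --count and --missing values mentioned in phase #3 follow directly from splitting the cluster in half. The sketch below works that out for an even number of nodes only, which is the case the table illustrates; for odd node counts it defers to the generated upgrade plan rather than guessing. The function name is hypothetical.

```shell
#!/bin/sh
# new_cluster_start_args NODE_COUNT
# Prints the --count/--missing arguments for starting the new cluster
# on half of an evenly sized cluster.
new_cluster_start_args() {
    nodes="$1"
    if [ $((nodes % 2)) -ne 0 ]; then
        echo "odd node count: follow the voltadmin plan_upgrade output" >&2
        return 1
    fi
    half=$((nodes / 2))
    echo "--count=$half --missing=$half"
}

# The four-node example from the table:
echo "voltdb start $(new_cluster_start_args 4)"
```

A real start command would also need the usual host list; only the two arguments discussed in the table are generated here.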

In extreme cases, you may decide during or after performing the upgrade that you do not want to use the latest version of VoltDB. If this happens, it is possible to fall back to the previous version of VoltDB.

During the phases identified as #1 and #2 in Table 4.1, “Overview of the Online Upgrade Process”, you can always rejoin the stopped nodes to the original cluster to return to your original configuration.

During or after you complete phase #3, you can return to your original configuration by:

Stopping the new cluster.

Resetting DR on the original cluster (voltadmin dr reset).

Reinitializing and rejoining the stopped nodes to the original cluster using the original software version.

Once the original cluster is stopped as part of phase #4, the way to revert to the original software version is to repeat the entire procedure starting at phase #1 and reversing the direction — "downgrading" from the new software version to the old software version. This process is valid as long as you have not modified the schema or deployment to use any new or changed features introduced in the new version.

The sections describing the upgrade process for passive DR, active XDCR, and XDCR with limited hardware all explain how to fall back to the previous version of VoltDB in case of emergency. This section explains how to fall back, or downgrade, when using the standard save and restore process described in Section 4.4.1, “Upgrading VoltDB Using Save and Restore”.

First, it is always a good idea to perform a backup, or snapshot, of the database before performing any maintenance on production systems. Although the voltadmin shutdown --save command creates a snapshot in the upgrade process, which can be used to fall back in an emergency, it is always safest to have a separate snapshot with a well-known ID and location outside the database root directory.

Second, the following process works if you are reverting between two recent versions of VoltDB and you do not use any new features between the upgrade and the downgrade. There are no guarantees an attempt to downgrade will succeed if the two software versions are more than one major version apart or if you utilize a new feature from the higher version software prior to downgrading.

With those caveats, the easiest way to fall back to a previous VoltDB version, if no changes have been made to the database itself, is to reinstall the older version of VoltDB, re-initialize the database root directory, then restart an empty database and restore a snapshot taken prior to the upgrade.
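One way to picture the backup and fallback described above is the pair of functions below. The server name, snapshot directory, and snapshot ID are hypothetical, starting the empty database in admin mode is an assumption borrowed from the manual upgrade procedure, and the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Dry run by default: print each command instead of executing it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# backup_before_upgrade HOST DIR ID
# Saves a snapshot with a well-known ID in a directory outside the
# database root, before any upgrade work starts.
backup_before_upgrade() {
    run voltadmin save --blocking --host="$1" "$2" "$3"
}

# fall_back_from_snapshot HOST DIR ID
# Restores that snapshot into an empty database on the older release.
fall_back_from_snapshot() {
    host="$1"
    dir="$2"
    id="$3"
    echo "# reinstall the older VoltDB release on all nodes first"
    run voltdb init --force                      # re-initialize the root (each node)
    run voltdb start --pause --host="$host"      # start empty, in admin mode
    run voltadmin restore --host="$host" "$dir" "$id"
    run voltadmin resume --host="$host"
}

backup_before_upgrade svr1 /var/backups/voltdb pre_upgrade
fall_back_from_snapshot svr1 /var/backups/voltdb pre_upgrade
```

Because the snapshot lives outside the database root, it stays available however many times the root directory is re-initialized.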

If changes were made to the contents of the database, you will need to take a new snapshot prior to downgrading. You can use the same process to downgrade that you used to upgrade:

Shut down the database with voltadmin shutdown --save.

Re-install the previous version of VoltDB.

Restart the database.